VR Medical Education System

Interactive design of a virtual reality learning system based on thoracic surgery anatomy

Design Context

This project was a course extension, based on real-world issues with parking in a crowded office. With many employees and limited parking information, drivers often waste time searching for spaces. We aimed to create a system that helps drivers find parking faster and makes management easier for staff.

What We Did

- Built a real-time display showing how many spots are available and where they are.

- Designed a tool for security staff to check who used specific spots during the day.

- Showed daily parking data to help improve space use and management.

Research

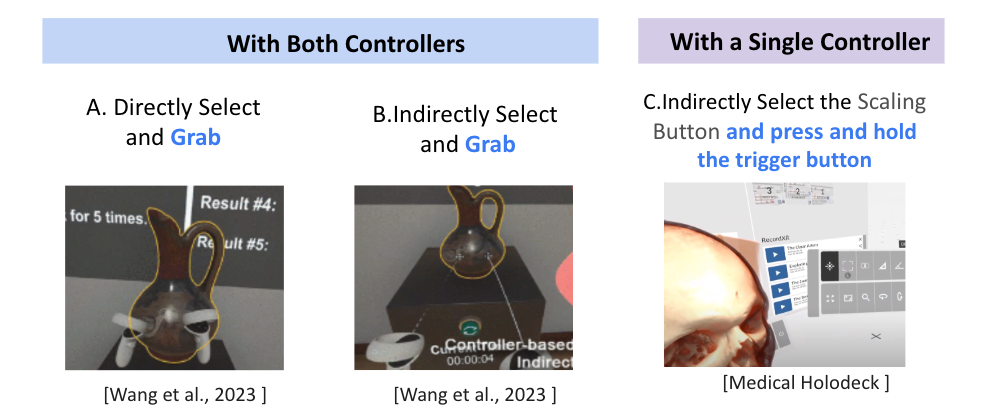

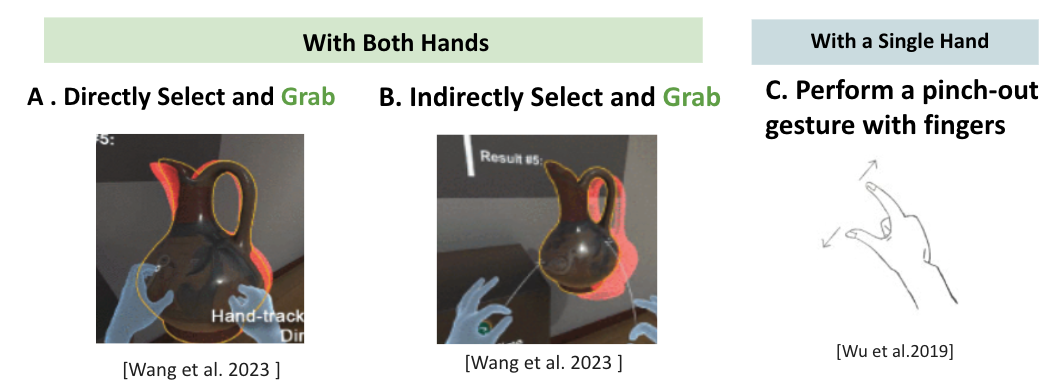

This research is divided into two parts: VR Controller-Based Interaction and Hand Gesture-Based Interaction. We analyzed common interaction methods used in current VR applications based on this classification.

Controller-Based VR Input Methods

Manipulating 3D Objects with Hand-Held Controllers

- Translation and Rotation: Users move the selected object by physically moving the controller, which changes both its position and orientation.

- Scaling: Users adjust the size of the object by moving the controller(s) along a specific axis.
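One common way to get the translation and rotation behaviour described above is to record the object's pose relative to the controller at the moment of grabbing, then re-apply that relative pose every frame. The sketch below assumes poses are given as a position tuple plus a unit quaternion in `(w, x, y, z)` order; the function names `begin_grab` and `update_grab` are hypothetical and not taken from the project.

```python
import math

def q_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )

def q_conj(q):
    """Conjugate of a quaternion (the inverse, for unit quaternions)."""
    w, x, y, z = q
    return (w, -x, -y, -z)

def q_rotate(q, v):
    """Rotate the 3D vector v by the unit quaternion q."""
    _, x, y, z = q_mul(q_mul(q, (0.0, *v)), q_conj(q))
    return (x, y, z)

def begin_grab(ctrl_pos, ctrl_rot, obj_pos, obj_rot):
    """Store the object's pose in the controller's local frame at grab time."""
    inv = q_conj(ctrl_rot)
    delta = tuple(o - c for o, c in zip(obj_pos, ctrl_pos))
    return q_rotate(inv, delta), q_mul(inv, obj_rot)

def update_grab(ctrl_pos, ctrl_rot, local_offset, local_rot):
    """Re-apply the stored local pose to the controller's current pose."""
    world_offset = q_rotate(ctrl_rot, local_offset)
    obj_pos = tuple(c + d for c, d in zip(ctrl_pos, world_offset))
    return obj_pos, q_mul(ctrl_rot, local_rot)
```

Because the offset is stored in the controller's frame, turning the wrist swings the object around the hand instead of spinning it in place, which is what makes a grabbed object feel attached.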

Design

This design phase covers User Flow Design and the Hi-Fi Prototype, both based on key needs identified in user research. We use flow diagrams and clear visuals to help different users (such as employees, visitors, and security staff) complete tasks easily. The goal is to make the system easy to use and the information easy to understand.

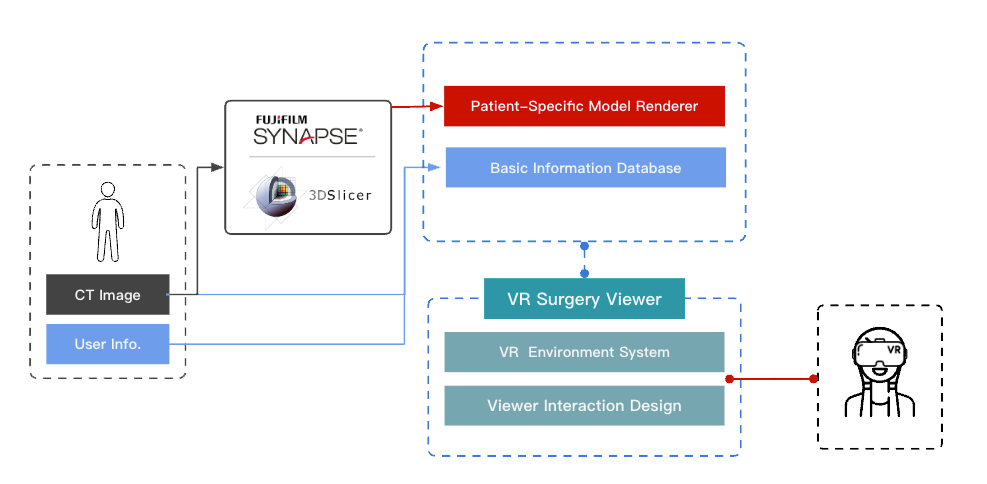

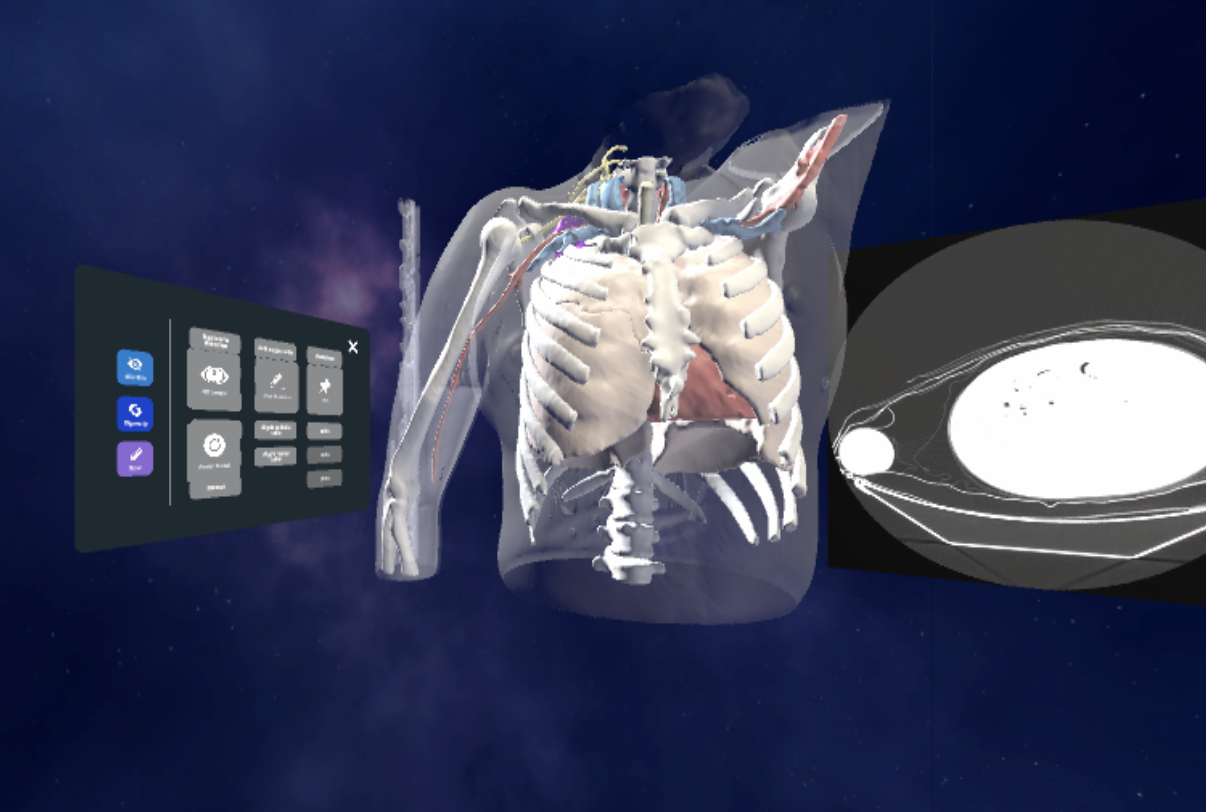

System Overall

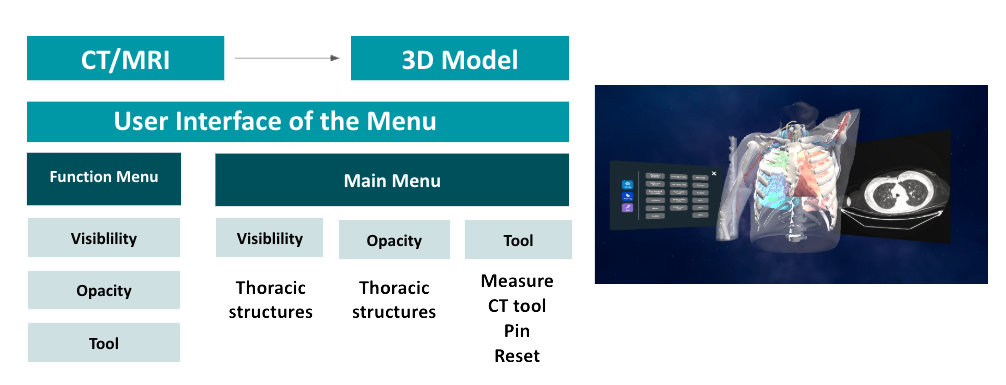

System Overall - Interface

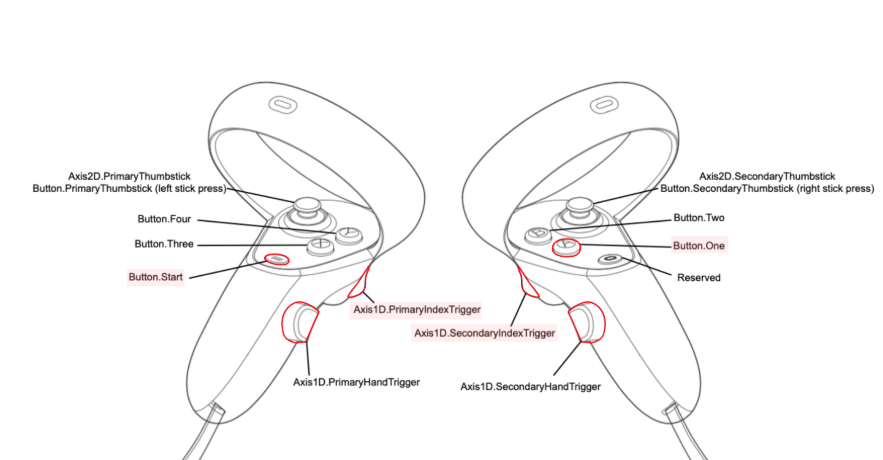

Controller Button Mapping:

- Direct Selection: Move the controllers close to the desired object.

- Indirect Selection: Move the controller's ray (or other indicator) close to the desired object.

- Trigger: PrimaryIndexTrigger or SecondaryIndexTrigger.

- Grab: PrimaryHandTrigger or SecondaryHandTrigger.

- Menu: Button.Start.

- Measurement: Button.One.
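The mapping above can be kept in a single lookup table so that interaction code asks for an action rather than a specific button. This is a minimal sketch: the button names are copied from the list as plain strings, while the `Action` enum and `active_actions` helper are hypothetical names introduced for illustration.

```python
from enum import Enum

class Action(Enum):
    TRIGGER = "trigger"
    GRAB = "grab"
    MENU = "menu"
    MEASUREMENT = "measurement"

# Button names as listed in the mapping; treated here as opaque strings.
BUTTON_MAP = {
    Action.TRIGGER: ("PrimaryIndexTrigger", "SecondaryIndexTrigger"),
    Action.GRAB: ("PrimaryHandTrigger", "SecondaryHandTrigger"),
    Action.MENU: ("Button.Start",),
    Action.MEASUREMENT: ("Button.One",),
}

def active_actions(pressed):
    """Return every action that has at least one of its buttons pressed."""
    pressed = set(pressed)
    return {
        action
        for action, buttons in BUTTON_MAP.items()
        if pressed.intersection(buttons)
    }
```

Keeping the table in one place means a remapping only touches `BUTTON_MAP`, and either hand's trigger resolves to the same action.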

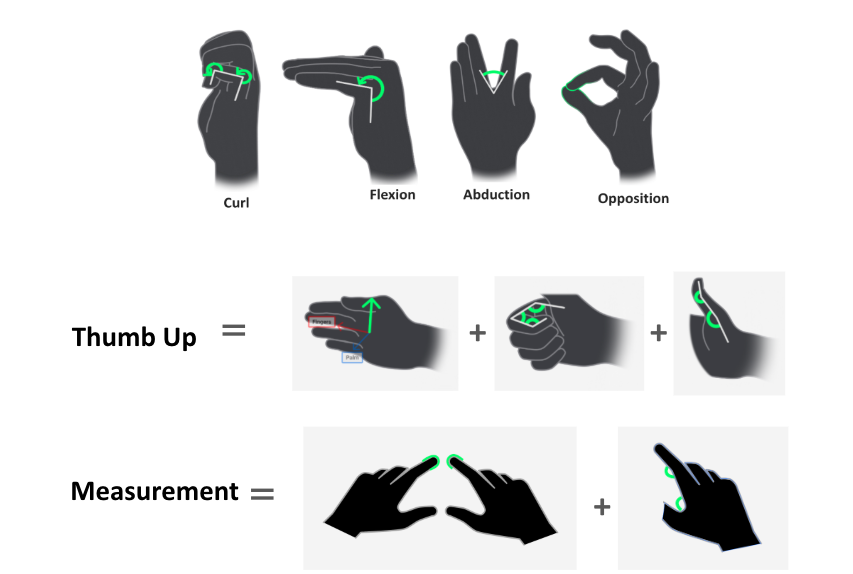

Hand Gestures:

- Direct Selection: Move the hand(s) close to the desired object.

- Indirect Selection: Move the ray (or other indicator) close to the desired object. In the illustration, select the form with only points and no lines.

- Trigger: After selecting the desired object, pinch and then release.

- Grab: After selecting the desired object, pinch and hold.

- Bring Up the Menu: Give a thumbs-up with your left hand.

- Measurement: Touch your index fingers together to start, then move your index fingers to draw the line segment. Its length is the measured distance.
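Trigger and Grab both start with a pinch and differ only in whether the pinch is released or held, so one way to tell them apart is by how long the pinch lasts. The sketch below is illustrative only: the 2 cm fingertip distance and the 0.4 s hold time are assumed values, not figures from the project, and `classify_pinch` is a hypothetical helper.

```python
import math

PINCH_DISTANCE_M = 0.02  # assumption: fingertips closer than 2 cm count as a pinch
HOLD_SECONDS = 0.4       # assumption: a pinch held this long counts as a grab

def is_pinching(thumb_tip, index_tip, threshold=PINCH_DISTANCE_M):
    """True when the thumb and index fingertips are within the threshold."""
    return math.dist(thumb_tip, index_tip) < threshold

def classify_pinch(samples, hold_seconds=HOLD_SECONDS):
    """Classify a time-ordered list of (timestamp_s, thumb_tip, index_tip).

    Returns "grab" as soon as a pinch has lasted hold_seconds, "trigger" if a
    shorter pinch is released, and None if neither has happened yet.
    """
    start = None
    for timestamp, thumb_tip, index_tip in samples:
        if is_pinching(thumb_tip, index_tip):
            if start is None:
                start = timestamp
            elif timestamp - start >= hold_seconds:
                return "grab"
        elif start is not None:
            return "trigger"
    return None
```

A time threshold like this trades a small delay before a grab registers for fewer accidental grabs during quick trigger pinches.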

Interaction Design - Manipulating 3D Objects in VR:

Translation and Rotation

- With controllers: Directly Select and Grab.

- With hand gestures: Directly Select and Grab.

Scaling

- With controllers: Directly Select and Grab with both controllers.

- With hand gestures: Directly Select and Grab with both hands.
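Two-handed scaling is often driven by how far apart the hands (or controllers) are compared with when the grab started. The following is a sketch under that assumption; the source does not state the exact scaling rule, and the clamp limits are hypothetical defaults.

```python
import math

def two_hand_scale(start_left, start_right, left, right,
                   start_scale=1.0, min_scale=0.1, max_scale=10.0):
    """Scale the object by the ratio of current to initial hand separation."""
    start_distance = math.dist(start_left, start_right)
    if start_distance == 0:
        # Hands started at the same point: no meaningful ratio, keep the scale.
        return start_scale
    factor = math.dist(left, right) / start_distance
    # Clamp so the model cannot shrink to nothing or grow without bound.
    return max(min_scale, min(max_scale, start_scale * factor))
```

Moving the hands twice as far apart doubles the model's size, and bringing them back restores it, so the gesture stays reversible within one grab.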

Interaction Design - Interacting with the Menu in VR:

With controllers:

- Bring Up the Menu: Press the menu button on the left controller.

- Reposition the Menu: Directly or indirectly select the edge of the panel and grab it.

- Press the Menu Buttons: Directly select the button, or indirectly select it and trigger. Both methods are implemented and can be used in our UI.

With hand gestures:

- Bring Up the Menu: Perform the Bring Up the Menu gesture with your left hand.

- Reposition the Menu: Directly or indirectly select the edge of the panel and grab it.

- Press the Menu Buttons: Directly select the button, or indirectly select it and trigger. Both methods are implemented and can be used in our UI.

Interaction Design - Other Interactions in VR:

With controllers:

- Measurement Tool: Press and hold the measurement button, then move the controller to draw the line and read the measurement value. Release the button to complete the measurement.

- CT Viewer Tool: Directly select and grab the red panel, then move the controller vertically to view the corresponding CT image.

- Model Material Replacement Tool: Indirectly select and trigger (or directly select) the button to change the model material.
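The controller measurement flow (press, move, release) can be modelled as a small state holder that reports the straight-line distance between the press point and the release point. This is a sketch with assumptions: world units are taken to be metres, the model is assumed to be displayed at 1:1 scale, and the `MeasurementTool` class is a hypothetical name.

```python
import math

class MeasurementTool:
    """Tracks one line-segment measurement from button press to release."""

    def __init__(self):
        self.start = None
        self.end = None

    def press(self, position):
        """Button pressed: anchor both ends of the line at the controller."""
        self.start = tuple(position)
        self.end = tuple(position)

    def move(self, position):
        """Controller moved while measuring: drag the free end of the line."""
        if self.start is not None:
            self.end = tuple(position)

    def current_length(self, units_per_meter=1000.0):
        """Length of the line so far; defaults to millimetres (assumption)."""
        if self.start is None:
            return None
        return math.dist(self.start, self.end) * units_per_meter

    def release(self, units_per_meter=1000.0):
        """Button released: return the final length and reset the tool."""
        length = self.current_length(units_per_meter)
        self.start = self.end = None
        return length
```

If the anatomy model is shown enlarged or reduced, the result would need dividing by the model's display scale to give a real-world distance.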

With hand gestures:

- Measurement Tool: Perform the Measurement gesture to start measuring the desired object. Trigger with your right hand to complete the measurement.

- CT Viewer Tool: Directly select and grab the red panel, then move your hand vertically to view the corresponding CT image.

- Model Material Replacement Tool: Indirectly select and trigger (or directly select) the button to change the model material.
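For the CT Viewer Tool, moving the red panel vertically has to be translated into a choice of CT image. One plausible approach, sketched here as an assumption, is to map the panel's height linearly onto the stack of slices; the parameter names and the linear mapping are hypothetical, not taken from the project.

```python
def slice_index(panel_y, y_min, y_max, num_slices):
    """Map the panel's height within [y_min, y_max] to a CT slice index."""
    if num_slices < 1:
        raise ValueError("num_slices must be at least 1")
    if y_max <= y_min:
        raise ValueError("y_max must be greater than y_min")
    # Normalise the height to 0..1, clamping when the panel leaves the range.
    t = (panel_y - y_min) / (y_max - y_min)
    t = max(0.0, min(1.0, t))
    # Scale to the slice count; the top of the range maps to the last slice.
    return min(num_slices - 1, int(t * num_slices))
```

Clamping keeps the viewer on the first or last image when the panel is dragged past either end of the model.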

Testing

To ensure the usability and learning effectiveness of the virtual surgery training system, we conducted a structured user testing process combining both quantitative and qualitative methods. This approach allowed us to identify usability issues, measure task performance, and understand users' subjective experiences in a realistic VR environment.

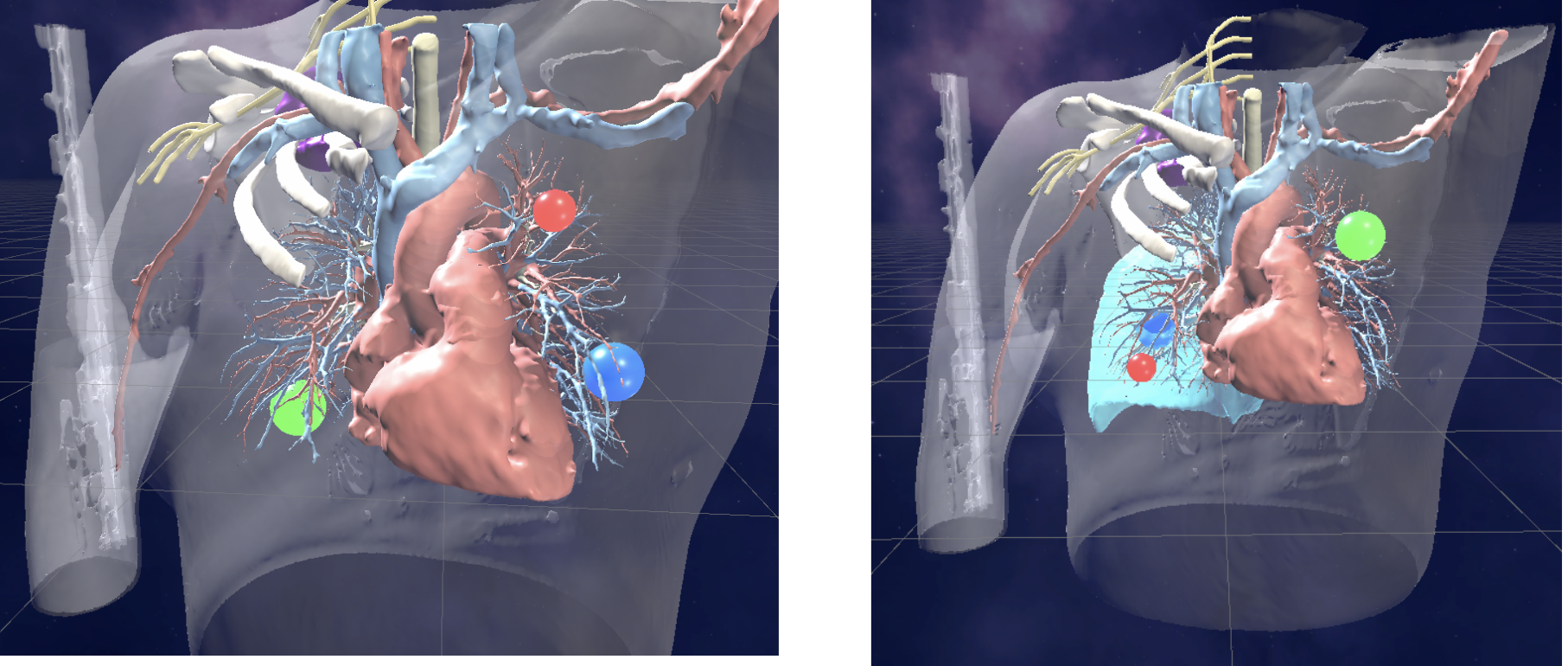

Quantitative Task-Based Testing

To evaluate the system's usability and learning support, we adopted a task-based quantitative testing approach. We designed several core tasks aligned with the learning objectives, such as identifying lesion locations, measuring distances, and completing specific operations. Participants completed these tasks in the VR environment, and we recorded completion time, task accuracy, and error count for each task.

Identifying Lesion Locations

Measuring Distances

Completing Operations
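The three metrics collected for each task (completion time, accuracy, and error count) can be summarised per task with a few lines of code. This is a sketch of one possible aggregation; the `Trial` record and its field names are hypothetical, and no values from the actual study are used.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class Trial:
    participant: str
    task: str
    seconds: float   # completion time
    correct: bool    # whether the task outcome was accurate
    errors: int      # number of errors made during the task

def summarize(trials):
    """Group trials by task and compute mean time, accuracy, and mean errors."""
    by_task = {}
    for trial in trials:
        by_task.setdefault(trial.task, []).append(trial)
    return {
        task: {
            "mean_seconds": mean(t.seconds for t in group),
            "accuracy": sum(t.correct for t in group) / len(group),
            "mean_errors": mean(t.errors for t in group),
        }
        for task, group in by_task.items()
    }
```

Running the same summary separately for controller and hand-gesture sessions would allow the two input methods to be compared task by task.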

Subjective Evaluation and Interviews

In addition to performance metrics, we ran subjective evaluations using the System Usability Scale (SUS) and the USEQ (User Satisfaction Evaluation Questionnaire) to capture users' impressions of the interface and experience. We also held post-test interviews to better understand users' emotions, challenges, and feedback. These qualitative insights gave us important direction for future design iterations.

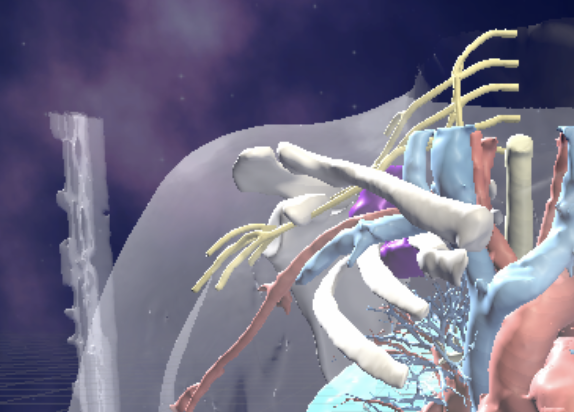
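For reference, a SUS score is computed from ten responses on a 1 to 5 scale using the standard scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a value from 0 to 100. The function name below is illustrative, and the example makes no claim about the scores obtained in this study.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5
```

Each participant's questionnaire yields one score, and the per-participant scores are then typically averaged for each input method.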

Reflection

This project showed us how VR can really enhance medical learning. Features like gesture controls, precise controller tools, CT viewing, and transparency adjustment all helped make the experience more engaging. Overall, users were satisfied with both input methods. Hand gestures felt natural, but there’s still room to improve accuracy, especially when it comes to measurements.

What’s Next?

Next, we plan to add assistive visuals or redesign the gestures to help users make more accurate measurements. We also want to allow separate manipulation of chest structures for more detailed interaction. In the future, we’ll include expert-guided sessions and replayable lessons to make the system even more complete.

Project Takeaways

- Combining gestures and controllers gives users flexibility and makes the experience more fun.

- Gestures are intuitive but need to be more precise for tasks like measuring.

- CT tools, rotation, and transparency all help users better understand the anatomy.

- User interviews revealed important issues and helped guide improvements.